We’re approaching a point where the signal-to-noise ratio is getting close to one: as the pace of misinformation approaches that of factual information, it’s becoming nearly impossible to tell what’s real. This guide teaches journalists how to identify AI-generated content under deadline pressure, offering seven advanced detection categories that every reporter needs to master.

As someone who helps newsrooms fight misinformation, here’s what keeps me up at night: Traditional fact-checking takes hours or days. AI misinformation generation takes minutes.

Video misinformation predates modern AI technology by decades. Even with the basic technical limitations of early recording equipment, footage could create devastating false impressions. In 2003, nanny Claudia Muro spent 29 months in jail because a low-frame-rate security camera made gentle motions look violent, and nobody thought to verify the footage. In January 2025, UK teacher Cheryl Bennett was driven into hiding after a deepfake video falsely showed her making racist remarks.

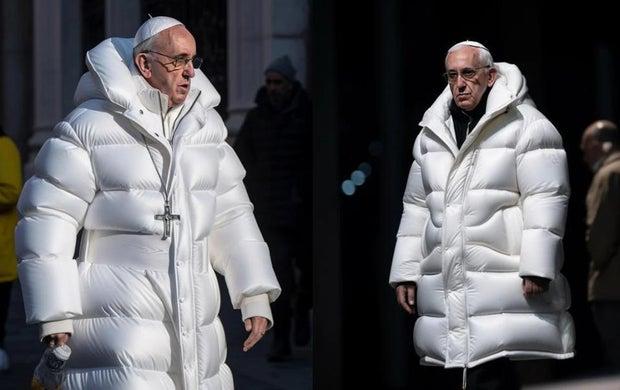

AI-generated image purporting to show Pope Francis wearing a Balenciaga puffer jacket. Image: Midjourney, Pablo Xavier

This viral image of Pope Francis in a white Balenciaga puffer coat fooled millions on social media before being revealed as AI-generated from a Midjourney text-to-image prompt. Key detection clues included the crucifix at his chest, which hangs inexplicably aloft: one half of its chain is visible, but where the other half should be there is only white puffer jacket. The image’s creator, Pablo Xavier, told BuzzFeed News: “I just thought it was funny to see the Pope in a funny jacket.”

Sometimes the most effective fakes require no AI at all. In May 2019, a video of House Speaker Nancy Pelosi was slowed to 75% speed and pitch-altered to make her appear intoxicated. In November 2018, the White House shared a sped-up version of CNN correspondent Jim Acosta’s interaction with a White House intern, making his arm movement appear more aggressive than in reality.

I recently created an entire fake political scandal — complete with news anchors, outraged citizens, protest footage, and the fictional mayor himself — in just 28 minutes during my lunch break. Total cost? Eight dollars. Twenty-eight minutes. One completely fabricated political crisis that could fool busy editors under deadline pressure.

Not long ago, I watched a seasoned fact-checker confidently declare an AI-generated image “authentic” because it showed a perfect five-fingered hand instead of a six-fingered one. But now, that shortcut is almost gone.

This is the brutal reality of AI detection: the methods that made us feel secure are evaporating before our eyes. In the early development of AI image generators, poorly drawn hands, with extra fingers or fused digits, were common and often used to spot AI-generated images. Viral fakes, such as the “Trump arrest” images from 2023, were partly exposed by these obvious hand errors. However, by 2025, major AI models like Midjourney and DALL-E have significantly improved at rendering anatomically correct hands. As a result, hands are no longer a reliable way to detect AI-created images, and anyone trying to identify AI-generated content must look for other, subtler signs.

The text rendering revolution happened even faster. Where AI protest signs once displayed garbled messages like “STTPO THE MADNESSS” and “FREEE PALESTIME,” some of the current models produce flawless typography. OpenAI specifically trained DALL-E 3 on text accuracy, while Midjourney V6 added “accurate text” as a marketable feature. What was once a reliable detection method now rarely works.

The misaligned ears, unnaturally asymmetrical eyes, and painted-on teeth that once distinguished AI faces are becoming rare. Portrait images generated in January 2023 routinely showed detectable failures. The same prompts today produce believable faces.

This represents a fundamental danger for newsrooms. A journalist trained on 2023 detection methods might develop false confidence, declaring obvious AI content as authentic simply because it passes outdated tests. This misplaced certainty is more dangerous than honest uncertainty.

Introducing: Image Whisperer

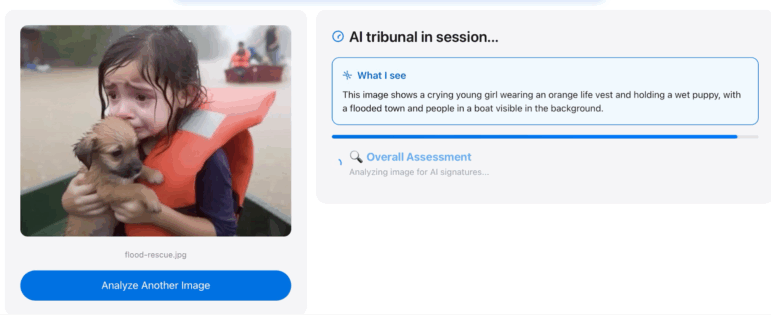

Analysis of an AI-generated image purporting to show a girl being rescued after flooding in the US. Image: Henk van Ess

I began wondering if I could build a verification assistant for AI content as a bonus for this article. I started to email experts. Scientists took me deep into physics territory I never expected: Fourier transforms, quantum mechanics of neural networks, mathematical signatures invisible to the human eye. One physicist explained how AI artifacts aren’t just visual glitches — they’re frequency domain fingerprints.
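To make the idea of a "frequency domain fingerprint" a little more concrete, here is a minimal Python sketch. It is an illustration only, not the method behind Image Whisperer or anything the scientists described in detail. The function names (`dft2_magnitude`, `high_frequency_share`), the 0.25 cycles-per-pixel cutoff, and the two toy 8x8 grids are all assumptions invented for this example. The sketch runs a naive 2D Fourier transform on a tiny grayscale grid and measures how much of the spectral energy sits at high frequencies. A fine, regular pixel pattern (a crude stand-in for a periodic generation artifact) shows up as a sharp high-frequency spike, while a smooth gradient does not.

```python
import cmath
import math


def dft2_magnitude(img):
    """Naive 2D discrete Fourier transform; returns the magnitude of each bin.

    Only practical for very small grids (cost grows with the 4th power of size).
    """
    h, w = len(img), len(img[0])
    mags = [[0.0] * w for _ in range(h)]
    for u in range(h):
        for v in range(w):
            total = 0j
            for y in range(h):
                for x in range(w):
                    angle = -2 * math.pi * (u * y / h + v * x / w)
                    total += img[y][x] * cmath.exp(1j * angle)
            mags[u][v] = abs(total)
    return mags


def high_frequency_share(img, cutoff=0.25):
    """Fraction of non-DC spectral energy above `cutoff` cycles per pixel.

    The cutoff is an arbitrary illustrative choice, not a calibrated threshold.
    """
    h, w = len(img), len(img[0])
    mags = dft2_magnitude(img)
    high = total = 0.0
    for u in range(h):
        for v in range(w):
            if u == 0 and v == 0:
                continue  # skip the DC bin (overall brightness)
            # Fold bin indices so each frequency lies in 0..0.5 cycles/pixel.
            fu = min(u, h - u) / h
            fv = min(v, w - v) / w
            energy = mags[u][v] ** 2
            total += energy
            if max(fu, fv) > cutoff:
                high += energy
    return high / total if total else 0.0


N = 8
# Toy "images": a smooth brightness ramp and a pixel-level checkerboard.
smooth = [[x + y for x in range(N)] for y in range(N)]
checker = [[(x + y) % 2 for x in range(N)] for y in range(N)]

smooth_share = high_frequency_share(smooth)
checker_share = high_frequency_share(checker)
print(f"smooth gradient: {smooth_share:.2f}")
print(f"checkerboard:    {checker_share:.2f}")
```

The checkerboard's share comes out far higher than the gradient's, even though both grids look unremarkable as raw numbers. Real photographs and real generators are much messier than this, so a single energy ratio like this one should not be treated as a detector. It only shows why a pattern invisible to the eye can still stand out in the frequency domain.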

by Henk van Ess, Global Investigative Journalism Network
